The more you understand something, the more confusing it becomes; knowledge has no end.

December 29, 2012

December 24, 2012

December 07, 2012

unique C code

I'm going to share how to make a fairly short program, and the result is...

see for yourself, OK hehehehe

First, write the following code:

```c
#include "perulangan.c"

int main(){
    ulang();
    return 0;
}
```

Save it as belajar.c.

Compile it with `gcc -std=gnu99 -o belajar belajar.c`

You will get an error like this:

```
for.c:1:18: fatal error: perulangan.c: No such file or directory
compilation terminated.
```

This happens because the file perulangan.c has not been created yet, or it is in a different folder.

Create perulangan.c with this code:

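```c
#include <stdio.h>

/* Prints a triangle of stars: 5 on the first row, one fewer on each following row. */
void ulang(){
    for(int a = 0; a < 5; a++){      /* outer loop: one pass per row */
        printf("\n");
        for(int b = 5; b > a; b--){  /* inner loop: 5 - a stars in this row */
            printf("*");
        }
    }
    printf("\n");
}
```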

Save it in the same folder as belajar.c, hehehe....

Oh yes: `void ulang()` means the function ulang does not return any value. `int main()` means main returns an int, and here `return 0;` makes it return zero (0), which tells the operating system that the program finished successfully.

`for` is a loop: it repeats its body as long as the loop condition is true and stops as soon as the condition becomes false.
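The sketch below shows what the two nested loops produce row by row. It is not from the original post. It introduces a hypothetical function `ulang_to_buffer` that draws the same triangle with int counters, takes the height `n` as a parameter, and writes into a caller-supplied buffer instead of calling printf, so the result can be checked.

```c
#include <string.h>   /* size_t */

/* Hypothetical variant of ulang(): the outer loop produces one row per pass,
 * and the inner loop writes n - a stars in row a. Output goes into `out`,
 * which must hold at least n * (n + 3) / 2 + 2 bytes.
 * Returns the number of characters written (not counting the final '\0'). */
size_t ulang_to_buffer(char *out, int n) {
    size_t pos = 0;
    for (int a = 0; a < n; a++) {        /* outer loop: rows 0 .. n-1 */
        out[pos++] = '\n';
        for (int b = n; b > a; b--) {    /* inner loop: runs n - a times */
            out[pos++] = '*';
        }
    }
    out[pos++] = '\n';
    out[pos] = '\0';
    return pos;
}
```

With n = 5 the buffer holds the same text that ulang() prints: a newline, then rows of 5, 4, 3, 2 and 1 stars, then a final newline.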

That's it for now, see you next time.

Juli 31, 2012

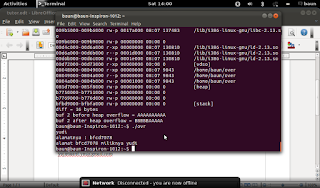

c acces with pointer

Melanjutkan tutorial bahasa c yang saya berikan tahun lalu

sekarang saya akan mengajarkan tentang acess data dengan menggunakan pointer

What exactly is a pointer? Look up the formal definition yourself. To me, a pointer is a guide to an address, like the signposts we often see along the road. What are pointers good for? They are very useful for knowing where a piece of data is located in memory.

When we declare a variable, we reserve space in the computer's memory. For example, char c[20] reserves 20 bytes (one byte per char) starting at the address &c. Likewise, int d; reserves sizeof(int) bytes, typically 4, at the address &d.
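The short sketch below checks the sizes mentioned above with `sizeof`. The function names are illustrative only. sizeof(char) is 1 by definition, while sizeof(int) depends on the platform.

```c
#include <stddef.h>   /* size_t */

/* Bytes reserved by char c[20]: 20 * sizeof(char) = 20. */
size_t bytes_for_char_array(void) {
    char c[20];
    return sizeof c;
}

/* Bytes reserved by int d; implementation-defined, 4 on common Linux systems. */
size_t bytes_for_int(void) {
    int d;
    return sizeof d;
}

/* For an array, c (converted to a pointer) and &c refer to the same starting
 * address; only their types differ. Returns 1. */
int array_start_matches(void) {
    char c[20];
    return (void *)c == (void *)&c;
}
```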

OK, let's go straight to the code:

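`$ cat over.c`

```c
// latihan (exercise)
#include <stdio.h>

int main(void) {
    char ch[20];
    char *point = ch, *ad = point;   /* point holds the address of ch; ad copies that address */

    scanf("%19s", ch);               /* read one word: at most 19 chars plus '\0' */

    printf("address %p\n", (void *)point);
    printf("address %p holds %s\n", (void *)ad, ad);
    return 0;
}
```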

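`char ch[20];`

declares (introduces) the variable ch as an array of 20 characters.

Likewise, `*point` and `*ad` are both declared as pointers:

point holds the address of ch, and ad holds the value of point, which is the same address. (Because ch is a char array, these pointers should have type char *, not int *.)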
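Here is a small sketch of two pointers sharing one address, using char * as the correct type for a char array. The function name is illustrative. Writing through one pointer is visible through the other, because both hold the same address.

```c
/* point and ad hold the same address, so a write through ad is seen
 * through point and through ch itself. Returns 1 if that holds. */
int pointers_share_address(void) {
    char ch[20] = "halo";
    char *point = ch;     /* the array name converts to the address of ch[0] */
    char *ad = point;     /* copies the address, not the characters */
    ad[0] = 'H';          /* write through ad ... */
    return point == ad && point[0] == 'H' && ch[0] == 'H';  /* ... read it back via point and ch */
}
```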

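scanf reads input from the keyboard (standard input).

%x is the hexadecimal format for unsigned integers. To print a pointer, that is, an address, the correct specifier is %p (with the pointer cast to void *). glibc also prints %p in hexadecimal.

The second printf call tells the computer to print the address stored in ad (in hexadecimal) to the screen, followed by the string found at the address ad points to.

The \n character means new line: it moves the output to the next line.

With %s, scanf takes the typed characters only up to the first space. Whatever comes after the space is not read by that call; it stays in the input buffer.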
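To see the "stops at the first space" behaviour without typing anything, this sketch uses sscanf, which reads from a string with the same %s rules as scanf. The helper `first_word_length` is hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Returns the length of the first whitespace-separated word in input,
 * or 0 if there is no word at all. %19s skips leading whitespace,
 * then stops at the next space, just like scanf("%19s", ...). */
size_t first_word_length(const char *input) {
    char word[20];
    if (sscanf(input, "%19s", word) != 1)   /* 1 = one item converted */
        return 0;
    return strlen(word);                    /* "hello world" -> "hello" */
}
```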

Now the question is: how do you make a virus in C? For Windows it's very easy, my friend. Doing it for Linux would be very complicated and needs in-depth research.

To access the value behind a pointer to an int, float, and so on, use the asterisk (*).

For example:

```c
float f, *t;
f = 2 + 2;
```

To output the value of f you can use
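The original sentence breaks off here. One plausible continuation, offered only as an assumption, is to point t at f and go through *t. The function name `read_through_pointer` is hypothetical.

```c
#include <stdio.h>

/* t is set to the address of f, so *t reads and writes f itself. */
float read_through_pointer(void) {
    float f, *t;
    f = 2 + 2;          /* f = 4.0 */
    t = &f;             /* t now holds the address of f */
    *t = *t + 1;        /* write through the pointer: f becomes 5.0 */
    printf("f = %f, *t = %f\n", f, *t);
    return f;
}
```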

Questions? Write them in the comments or send an email to yujimaarif.ym@gmail.com

July 23, 2012

assembly

Save the following as log.asm:

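```nasm
section .text
global _start

_start:
    ; setreuid(0, 0): syscall number 0x46 (70) on 32-bit Linux
    xor eax, eax
    mov al, 0x46
    xor ebx, ebx            ; ruid = 0
    xor ecx, ecx            ; euid = 0
    int 0x80

    ; execve("/bin//sh", ["/bin//sh", NULL], NULL): syscall 0xb (11)
    xor eax, eax
    push eax                ; NUL terminator for the string
    push 0x68732f2f         ; "//sh" (little-endian)
    push 0x6e69622f         ; "/bin"
    mov ebx, esp            ; ebx = address of "/bin//sh"
    push eax                ; argv[1] = NULL
    push ebx                ; argv[0] = "/bin//sh"
    mov ecx, esp            ; ecx = argv
    xor edx, edx            ; envp = NULL
    mov al, 0xb
    int 0x80
```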

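Build it with:

```sh
nasm -f elf log.asm
ld -o log log.o
```

`-f elf` produces a 32-bit object. On 64-bit Linux, link it with `ld -m elf_i386 -o log log.o` instead.

Run it with `./log`.

Give it a try.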

June 29, 2012

Linux assembly

```nasm
; Filename: lat.asm
; Developer: yudi
; Date: 06 mar 2012
; Purpose: This program demonstrates
;          procedural programming and
;          the usage of environment
;          variables.
; Build:
;   nasm -f elf -o lat.o lat.asm
;   gcc -o lat lat.o
;   (on 64-bit Linux: gcc -m32 -no-pie -o lat lat.o, which needs the 32-bit C library)

BITS 32

GLOBAL main
EXTERN puts
EXTERN exit

%define @ARG EBP + 8                ; first stack argument of a cdecl function
%define @VAR EBP - 4                ; first local variable (EBP - 8 lay outside the reserved slot)

SECTION .data

strEnvironment DB '-----------------------', 10
               DB ' Environment Variables', 10
               DB '-----------------------', 0

strArguments   DB '------------------------', 10
               DB ' Command Line Arguments', 10
               DB '------------------------', 0

SECTION .text

; envs(envc, envp): print every environment string with puts
envs:
STRUC @ENVP                         ; argument layout
    .envc RESD 1
    .envp RESD 1
ENDSTRUC
STRUC $ENVP                         ; local variable layout
    .ptr RESD 1
ENDSTRUC
    PUSH EBP
    MOV EBP, ESP
    SUB ESP, $ENVP_size

    PUSH DWORD strEnvironment
    CALL puts
    ADD ESP, 4                      ; remove the argument of puts

    MOV ESI, [@ARG + @ENVP.envp]
    MOV ECX, [@ARG + @ENVP.envc]
    XOR EAX, EAX                    ; byte offset: start at envp[0]
.scan_envs:
    CMP ECX, 0
    JE .done
    DEC ECX
    MOV EBX, [ESI + EAX]
    OR EBX, EBX
    JE .done                        ; a NULL entry ends the list
    ADD EAX, 4
    MOV [@VAR - $ENVP.ptr], EAX     ; puts may change EAX and ECX, so save both
    PUSH ECX
    PUSH EBX
    CALL puts
    ADD ESP, 4
    POP ECX                         ; the already decremented counter
    MOV EAX, [@VAR - $ENVP.ptr]
    JMP .scan_envs
.done:
    XOR EAX, EAX
    LEAVE
    RET

; args(argc, argv): print the command-line arguments, skipping argv[0]
args:
STRUC @ARGS
    .argc RESD 1
    .argv RESD 1
ENDSTRUC
STRUC $ARGS
    .ptr RESD 1
ENDSTRUC
    PUSH EBP
    MOV EBP, ESP
    SUB ESP, $ARGS_size

    PUSH DWORD strArguments
    CALL puts
    ADD ESP, 4

    MOV ESI, [@ARG + @ARGS.argv]
    MOV ECX, [@ARG + @ARGS.argc]
    DEC ECX                         ; argv[0] is the program name ...
    MOV EAX, 4                      ; ... so start at argv[1]
.scan_args:
    CMP ECX, 0
    JE .done
    DEC ECX
    MOV EBX, [ESI + EAX]
    OR EBX, EBX
    JE .done
    ADD EAX, 4
    MOV [@VAR - $ARGS.ptr], EAX
    PUSH ECX
    PUSH EBX
    CALL puts
    ADD ESP, 4
    POP ECX
    MOV EAX, [@VAR - $ARGS.ptr]
    JMP .scan_args
.done:
    XOR EAX, EAX
    LEAVE
    RET

; main(argc, argv, envp)
main:
STRUC ENV
    .argc RESD 1
    .argv RESD 1
    .envp RESD 1
ENDSTRUC
    PUSH EBP
    MOV EBP, ESP

    PUSH DWORD [@ARG + ENV.argv]
    PUSH DWORD [@ARG + ENV.argc]
    CALL args
    ADD ESP, 8

    ; count the environment strings (envp ends with a NULL pointer)
    MOV ESI, [@ARG + ENV.envp]
    XOR ECX, ECX                    ; byte offset: start at envp[0]
    XOR EAX, EAX                    ; counter
.continue:
    MOV EBX, [ESI + ECX]
    OR EBX, EBX
    JE .count_done
    ADD ECX, 4
    INC EAX
    JMP .continue
.count_done:
    PUSH DWORD [@ARG + ENV.envp]
    PUSH EAX
    CALL envs
    ADD ESP, 8

    XOR EAX, EAX
    PUSH EAX
    CALL exit                       ; exit(0), does not return

    LEAVE
    RET
```

Study it yourself and run it on your Linux machine.

C programming tutorial

This is source code that slows down a computer running Linux: it keeps allocating memory until memory runs out.

```c
#include <unistd.h>
#include <stdlib.h>
#include <stdio.h>

#define ONE_K (1024)

int main()
{
    char *some_memory;
    int size_to_allocate = ONE_K;
    int megs_obtained = 0;
    int ks_obtained = 0;

    while (1) {
        /* each pass allocates 100024 blocks of 1 KB (about 98 MiB), so the
         * "Megabytes" count printed below is much lower than the real amount */
        for (ks_obtained = 0; ks_obtained < 100024; ks_obtained++) {
            some_memory = (char *)malloc(size_to_allocate);
            if (some_memory == NULL) exit(EXIT_FAILURE);
            sprintf(some_memory, "Hell Lo World");
        }
        megs_obtained++;
        printf("Now allocated %d Megabytes\n", megs_obtained);
    }

    exit(EXIT_SUCCESS);   /* never reached: the loop only ends via exit(EXIT_FAILURE) */
}
```

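`char *some_memory;` declares the pointer variable some_memory.

`some_memory = (char *)malloc(size_to_allocate);` initializes some_memory: malloc reserves size_to_allocate bytes on the heap and returns the address of that block, or NULL if it fails.

sprintf writes formatted text into memory, here into the block that some_memory points to.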
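The program above deliberately never releases what it allocates. For comparison, here is a sketch of the same malloc and sprintf steps with the block handed back via free(). The function name `write_into_block` is hypothetical.

```c
#include <stdio.h>
#include <stdlib.h>

#define ONE_K (1024)

/* Reserve 1 KB, write a string into it, then free it again.
 * Returns the number of characters sprintf wrote, or -1 if malloc failed. */
int write_into_block(void) {
    char *some_memory = malloc(ONE_K);               /* 1024 bytes on the heap */
    if (some_memory == NULL)
        return -1;
    int n = sprintf(some_memory, "Hell Lo World");   /* 13 chars plus '\0' */
    free(some_memory);                               /* give the memory back */
    return n;
}
```

Because each call frees its block, calling this repeatedly does not keep growing the program's memory use.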

`int size_to_allocate = ONE_K;` declares the variable and initializes it with ONE_K in the same statement.

`#define ONE_K (1024)`

tells the preprocessor, the step that runs before the actual compilation, to replace every ONE_K with (1024).

For example, with `#define tulis printf`

every occurrence of the word tulis (as a separate token, not inside a string) is replaced with printf.
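The sketch below shows that #define is plain text replacement. It reuses the `tulis` example from the text. The `BAD_TWO_K`/`GOOD_TWO_K` macros and the function names are made up for illustration.

```c
#include <stdio.h>

#define ONE_K (1024)
#define tulis printf                 /* the example from the text */

#define BAD_TWO_K  1024 + 1024       /* no parentheses */
#define GOOD_TWO_K (1024 + 1024)

/* After preprocessing this is printf("ONE_K = %d\n", (1024));
 * the ONE_K inside the string literal is not replaced.
 * printf returns the number of characters written: 13. */
int print_one_k(void) {
    return tulis("ONE_K = %d\n", ONE_K);
}

/* Textual replacement:
 *   BAD_TWO_K * 2  becomes 1024 + 1024 * 2   = 3072
 *   GOOD_TWO_K * 2 becomes (1024 + 1024) * 2 = 4096 */
int bad_double(void)  { return BAD_TWO_K * 2; }
int good_double(void) { return GOOD_TWO_K * 2; }
```

This is why the program writes `#define ONE_K (1024)` with parentheses. For a single number they make no difference, but for an expression they keep the result correct.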

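After that, create the launcher:

create a folder named code,

save mem.c in the code folder and compile it there into an executable named mem (for example `gcc -o mem mem.c`),

then create the launcher as a file named launcher:

```sh
#!/bin/sh
# Start ./mem from the code folder again and again, one second apart.
cd code || exit 1
while true
do
    sleep 1
    ./mem
done
```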

June 14, 2012

Making a qibla locator

Every point on Earth can be expressed

as a longitude and a latitude.

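The formulas used for the calculation (spherical trigonometry for a triangle with vertices A, B, C and opposite sides x, y, z) are:

$$\cos y = \cos x \cos z + \sin x \sin z \cos B$$

$$\cos z = \cos x \cos y + \sin x \sin y \cos C$$

$$\frac{\sin x}{\sin A} = \frac{\sin y}{\sin B} = \frac{\sin z}{\sin C}$$

Combining the three formulas above gives

$$\tan B = \frac{\sin C}{\sin x \cot y - \cos x \cos C}$$

with

$$C = B_x - B_y, \qquad x = 90^\circ - L_y, \qquad y = 90^\circ - L_x$$

where L is latitude and B (Indonesian *bujur*) is longitude. Subscript x refers to the Ka'bah and subscript y to our position.

Using

$$\cos(90^\circ - \theta) = \sin\theta, \qquad \sin(90^\circ - \theta) = \cos\theta, \qquad \cot(90^\circ - \theta) = \tan\theta$$

the equation becomes

$$\tan B = \frac{\sin(B_x - B_y)}{\cos L_y \tan L_x - \sin L_y \cos(B_x - B_y)}$$

and the angle is B = arctan(tan B). In practice, computing it with atan2 of the numerator and the denominator puts the angle in the correct quadrant.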

point A = the Ka'bah

point B = our position

point C = the North Pole

The qibla direction (the azimuth, measured clockwise from north) is given by angle B, the angle at our position.

That is how the qibla direction is calculated mathematically.

In code it looks like this:

MainActivity.java

```java
package com.baunAndroid;

import android.app.Activity;
import android.content.Context;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.graphics.Path;
import android.hardware.Sensor;
import android.hardware.SensorEvent;
import android.hardware.SensorEventListener;
import android.hardware.SensorManager;
import android.location.Location;
import android.location.LocationListener;
import android.location.LocationManager;
import android.os.Bundle;
import android.util.Log;
import android.view.View;

public class MainActivity extends Activity {

    private static final String TAG = "Compass";

    private SensorManager mSensorManager;
    private Sensor mSensor;
    private SampleView mView;
    private float[] mValues;          // latest orientation values; mValues[0] = heading in degrees
    private double lonMosque;         // current longitude (from GPS)
    private double latMosque;         // current latitude (from GPS)
    private LocationManager lm;
    private LocationListener loclistenD;

    // Listens to the orientation sensor to find the direction of north
    private final SensorEventListener mListener = new SensorEventListener() {
        public void onSensorChanged(SensorEvent event) {
            mValues = event.values;
            if (mView != null) {
                mView.invalidate();   // redraw the arrow with the new heading
            }
        }

        public void onAccuracyChanged(Sensor sensor, int accuracy) {
        }
    };

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle icicle) {
        super.onCreate(icicle);
        mSensorManager = (SensorManager) getSystemService(Context.SENSOR_SERVICE);
        mSensor = mSensorManager.getDefaultSensor(Sensor.TYPE_ORIENTATION);
        mView = new SampleView(this);
        setContentView(mView);

        // Use the GPS: start from the last known fix, if there is one
        lm = (LocationManager) getSystemService(Context.LOCATION_SERVICE);
        Location loc = lm.getLastKnownLocation(LocationManager.GPS_PROVIDER);
        if (loc != null) {
            latMosque = loc.getLatitude();
            lonMosque = loc.getLongitude();
        }

        // Ask the location manager to send us location updates
        // (minimum interval 30 s, minimum distance 10 m)
        loclistenD = new DispLocListener();
        lm.requestLocationUpdates(LocationManager.GPS_PROVIDER, 30000L, 10.0f, loclistenD);
    }

    // Direction of the Ka'bah from (lngMasjid, latMasjid), in degrees clockwise from north
    private double QiblaCount(double lngMasjid, double latMasjid) {
        double lngKabah = 39.82616111;
        double latKabah = 21.42250833;
        double lKlM = (lngKabah - lngMasjid);
        double sinLKLM = Math.sin(lKlM * 2.0 * Math.PI / 360);
        double cosLKLM = Math.cos(lKlM * 2.0 * Math.PI / 360);
        double sinLM = Math.sin(latMasjid * 2.0 * Math.PI / 360);
        double cosLM = Math.cos(latMasjid * 2.0 * Math.PI / 360);
        double tanLK = Math.tan(latKabah * 2 * Math.PI / 360);
        double denominator = (cosLM * tanLK) - sinLM * cosLKLM;
        double Qibla;
        double direction;
        Qibla = Math.atan2(sinLKLM, denominator) * 180 / Math.PI;
        direction = Qibla < 0 ? Qibla + 360 : Qibla;
        return direction;
    }

    // Start listening to the orientation sensor when the activity resumes
    @Override
    protected void onResume() {
        super.onResume();
        mSensorManager.registerListener(mListener, mSensor, SensorManager.SENSOR_DELAY_GAME);
    }

    // Stop listening to the sensor when the activity is no longer visible
    @Override
    protected void onStop() {
        mSensorManager.unregisterListener(mListener);
        super.onStop();
    }

    // Draws an arrow that points towards the qibla
    private class SampleView extends View {
        private Paint mPaint = new Paint();
        private Path mPath = new Path();
        private boolean mAnimate;

        public SampleView(Context context) {
            super(context);
            // Arrow shape, tip pointing to the top of the screen
            mPath.moveTo(0, -50);
            mPath.lineTo(20, 60);
            mPath.lineTo(0, 50);
            mPath.lineTo(-20, 60);
            mPath.close();
        }

        @Override
        protected void onDraw(Canvas canvas) {
            Paint paint = mPaint;
            canvas.drawColor(Color.WHITE);
            paint.setAntiAlias(true);
            paint.setColor(Color.DKGRAY);
            paint.setStyle(Paint.Style.FILL_AND_STROKE);

            int w = canvas.getWidth();
            int h = canvas.getHeight();
            int cx = w / 2;
            int cy = h / 2;
            float Qibla = (float) QiblaCount(lonMosque, latMosque);

            canvas.translate(cx, cy);
            if (mValues != null) {
                // mValues[0] is the heading of the top of the phone, clockwise from north;
                // rotating by (Qibla - heading) turns the arrow towards the qibla
                canvas.rotate(Qibla - mValues[0]);
            }
            canvas.drawPath(mPath, mPaint);
        }

        @Override
        protected void onAttachedToWindow() {
            mAnimate = true;
            super.onAttachedToWindow();
        }

        @Override
        protected void onDetachedFromWindow() {
            mAnimate = false;
            super.onDetachedFromWindow();
        }
    }

    // Receives GPS updates and stores the current position
    private class DispLocListener implements LocationListener {
        @Override
        public void onLocationChanged(Location loc) {
            latMosque = loc.getLatitude();
            lonMosque = loc.getLongitude();
            if (mView != null) {
                mView.invalidate();
            }
        }

        @Override
        public void onProviderDisabled(String provider) {
        }

        @Override
        public void onProviderEnabled(String provider) {
        }

        @Override
        public void onStatusChanged(String provider, int status, Bundle extras) {
        }
    }
}
```

The manifest (AndroidManifest.xml):

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.baunAndroid"
    android:versionCode="1"
    android:versionName="1.0" >

    <uses-sdk android:minSdkVersion="8" />

    <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>

    <application
        android:icon="@drawable/ic_launcher"
        android:label="@string/app_name" >
        <activity
            android:name=".MainActivity"
            android:label="@string/app_name" >
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>

</manifest>
```

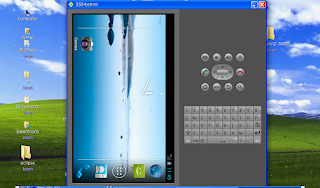

When the app launches, the screen in the image appears.

Run it by pressing Ctrl+F11 in Eclipse.

May 04, 2012

a world without borders

Right now there is a craze that is having a very good impact: the internet and smartphone craze.

Android is one of the good, open smartphone platforms.

This tutorial builds a simple Android app. Later I'll post a follow-up tutorial that goes further.

OK, let's get started.

First open File > New > Android Project > type a project name, e.g. JamAnalog > Next > choose the Android API level to build for, e.g. API level 8 > enter a package name, e.g. net.learnAndroid > Finish.

Then, in the Package Explorer, find res > layout > main.xml.

Change main.xml to look like this:

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- LinearLayout sets the layout used for the screen.
     layout_width:  width of the layout; fill_parent fills the whole parent.
     layout_height: height of the layout.
     orientation:   whether the items inside are arranged vertically or horizontally. -->
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="fill_parent"
    android:layout_height="fill_parent"
    android:orientation="vertical" >

    <!-- AnalogClock displays an analog clock widget. -->
    <AnalogClock
        android:layout_width="fill_parent"
        android:layout_height="wrap_content"/>

</LinearLayout>
```

January 27, 2012

HOW TO INSTALL ECLIPSE AND THE ANDROID SDK

I'll share how to install the Android ADT. Here's how.

First install Java: open a terminal and type `sudo apt-get install openjdk-7-jdk openjdk-7-jre`

1. Download Eclipse here and choose the newest Eclipse; I chose Eclipse Indigo. When the download finishes, unzip Eclipse and put it in whatever directory you like.

Download Android SDK 10 here and unzip it. Then open the SDK's tools folder and run android. When the SDK and AVD Manager appears, go to Settings and enable forcing https:// sources to be fetched using http://.

2. After that, download the Android SDK here and choose the newest SDK.

When the download finishes, unzip it to whatever directory you like.

3. Run Eclipse from the folder you unzipped (eclipse.exe on Windows, eclipse on Linux). The first time you run Eclipse,

a prompt asks you to choose the folder where all your projects will be saved. Pick whatever you like, choose "Use this as the default", then press OK.

Once Eclipse is open, choose Help, then Install New Software.

Before entering http://dl-ssl.google.com/android/eclipse, enter http://dl.google.com/eclipse/plugin/3.7, follow the prompts, then restart.

After that a window appears. Find the Work with field and type http://dl-ssl.google.com/android/eclipse in it. When Developer Tools appears, tick its checkbox and press Next.

Next, the tools to be downloaded are listed; choose Next. A license agreement appears: read it, choose agree, and press Finish. If you get a warning, press OK.

When the download has finished, restart Eclipse.

Choose Window, then Preferences, and select Android in the left panel.

Browse to the directory where you saved the Android SDK, press Apply, then OK.

Choose Window, then SDK Manager, to download the tools you need. When the installation finishes, choose Window, then AVD Manager, then New. Enter any name you

like in the Name field, e.g. android. In the Target field choose the newest device, e.g. 4.0.3. In the Skin field choose Built-in and Default for the phone version, or WXGA for the tablet version.

Choose Override the existing... and press Create AVD.

Then press Start and wait until the Android device appears.

January 18, 2012

a good poem

There is a country inhabited by scoundrels

Its sea was once parted by the staff of Moses

Noah left its land because it was drowned by the flood

From the sky, condors dropped blazing stones

Do you know the signs of a country of scoundrels?

It is a country whose leaders live in luxury

But whose people eat by scavenging through garbage

Or labor in other people's countries, paid in curses and bare-fisted blows

In the country of scoundrels

Good and clean people are considered wrong

Jailed just for often meeting journalists

Deceiving the people through elections becomes normal

Because only the rulers are allowed to be angry

While the people can only resign themselves

So if your country is ruled by scoundrels

Don't be in a hurry to complain to Allah

Because God will not change a people

Unless that people changes itself

So if your country is ruled by scoundrels

Drive them out with revolution

If you cannot do it with revolution,

Then with demonstrations

If you cannot do it with demonstrations, then with discussion

But that is the weakest faith of the struggle

January 17, 2012

read it yourself

Some people who have heard of "the theory of evolution" or "Darwinism" may think these concepts concern only the field of biology and have no influence whatsoever on everyday life. This assumption is badly mistaken, because the theory turns out to be more than a biological concept. The theory of evolution has become the foundation of a philosophy that misleads a large part of humanity. That philosophy is "materialism", which contains a number of false ideas about why and how human beings appeared on earth. Materialism teaches that nothing exists except matter and that matter is the essence of everything, living and non-living. Starting from this idea, materialism denies the existence of the Creator.

In the 20th century, the theory of evolution was refuted not only by molecular biology but also by paleontology, the science of fossils. No fossil remains supporting evolution have ever been found in excavations carried out all over the world.

Fossils are the remains of living things that lived in the past. The shape and structure of a creature's skeleton can be preserved intact if its body is quickly protected from contact with air. These skeletal remains give us information about the history of life on earth. So it is the fossil record that provides the scientific answer to questions about the origin of living things.

DARWIN'S VIEW

The theory of evolution states that all the diverse living things descend from a single common ancestor. According to this theory, such a variety of living things came about through small, gradual variations over a very long span of time. The theory states that a single-celled organism formed first. Over the following hundreds of millions of years, this single-celled organism changed into fish and invertebrates (animals without a backbone) living in the sea. These fish then supposedly came onto land and turned into reptiles. The tale goes on and on, up to the claim that birds and mammals evolved from reptiles.

If this view were true, there should be a great number of "transitional species" (also called intermediate species, or missing-link species) linking each species to the species that was its ancestor. For example, if reptiles really evolved into birds, then half-bird, half-reptile creatures should have lived in great numbers in the past. In addition, these transitional creatures should have had organs that were imperfectly formed or incomplete. Darwin called these hypothetical creatures "intermediate transitional forms".

The evolutionary scenario also says that fish, which evolved from invertebrates, later transformed themselves into amphibians able to live on land. (Amphibians are animals that can live both on land and in water, such as frogs.) But, as you might expect, this scenario has no evidence either. Not a single fossil shows that a half-fish, half-amphibian creature ever existed.

When he put forward this theory, he could not show any fossil evidence of these transitional forms. In other words, Darwin was merely offering a conjecture without evidence.

THE COELACANTH TURNS OUT TO BE STILL ALIVE

Until 70 years ago, evolutionists had a fossil fish that they believed to be the "ancestor of land animals". However, advances in science have demolished all the evolutionists' claims about this fish. The absence of transitional fossils between fish and amphibians is a fact that evolutionists themselves still acknowledge today. Yet until about 70 years ago, a fossil fish called the coelacanth was accepted as a transitional form between fish and land animals. Evolutionists claimed that the coelacanth, estimated to be 410 million years old, was a transitional form with primitive lungs, a developed brain, digestive and circulatory systems ready to function on land, and even a primitive walking mechanism. This evolutionary interpretation was accepted as unquestionable truth in the scientific world until the end of the 1930s.

However, on 22 December 1938, a very interesting discovery was made in the Indian Ocean. A fish of the coelacanth family, previously put forward as a transitional form that had become extinct 70 million years ago, was caught alive! Without doubt, the discovery of this "living" coelacanth dealt a severe blow to the evolutionists. The evolutionist paleontologist J. L. B. Smith said he would no longer be surprised if he met a living dinosaur. (Jean-Jacques Hublin, The Hamlyn Encyclopædia of Prehistoric Animals, New York: The Hamlyn Publishing Group Ltd., 1984, p. 120). In the following years, 200 coelacanths were caught in various places around the world.

THE END OF A MYTH

The coelacanth is still alive! The team that caught the first living coelacanth in the Indian Ocean on 22 December 1938 is seen here with the fish.

The existence of the living coelacanth reveals how far evolutionists will go in inventing imaginary scenarios. Contrary to their claims, the coelacanth turned out to have neither primitive lungs nor a large brain. The organ that evolutionist researchers took for a primitive lung turned out to be nothing but a fat-filled sac. (Jacques Millot, "The Coelacanth", Scientific American, Vol 193, December 1955, p. 39). Moreover, the coelacanth, described as "a reptile candidate preparing to leave the sea for the land", is in reality a fish that lives in the ocean depths and never comes closer than 180 meters to the surface. (Bilim ve Teknik (Science and Technology), November 1998, No. 372, p. 21).

MAN WITH AN APE'S JAW

The Piltdown Man skull was presented to the world for more than 40 years as the most important evidence of "human evolution". However, this skull turned out to be nothing but the greatest scientific hoax in history.

A reconstruction of the Piltdown Man skull that was once

displayed in various museums

In 1912, a well-known doctor who was also an amateur paleoanthropologist, Charles Dawson, announced that he had found a jawbone and a skull fragment in a pit at Piltdown, England. Although the jawbone resembled an ape's, the teeth and skull resembled a human's. The specimen was named "Piltdown Man". The fossil was believed to be 500,000 years old and was displayed in various museums as clear proof of human evolution. For more than 40 years, many scientific articles were written about "Piltdown Man", many interpretations and drawings were made, and the fossil was presented as important evidence of human evolution. No fewer than 500 doctoral theses were written on the subject. (Malcolm Muggeridge, The End of Christendom, Grand Rapids, Eerdmans, 1980, p. 59.)

In 1949, Kenneth Oakley of the British Museum's paleontology department tried the "fluorine test", a new method for determining the age of ancient fossils. The test was carried out on the Piltdown Man fossil, and the result was astonishing: the jawbone of Piltdown Man contained no fluorine at all. This showed that the bone had been buried no more than a few years earlier. The skull, which contained a small amount of fluorine, turned out to be only a few thousand years old.

Further research revealed that Piltdown Man was the greatest scientific fraud in history. It was an artificial skull: the cranium came from a man who had lived 500 years earlier, and the jawbone belonged to an ape that had died recently! The teeth were then neatly arranged and added to the jaw, and the joints were filled in to make them look human. Finally, all these parts were stained with potassium dichromate to give them an ancient appearance.

Le Gros Clark, one of the members of the team that uncovered the forgery, could not hide his astonishment and said: "the evidence of artificial abrasion immediately sprang to the eye. Indeed, it seemed so obvious that one may well ask: how was it that it had escaped notice before?" (Stephen Jay Gould, "Smith Woodward's Folly", New Scientist, 5 April 1979, p. 44) When this came to light, "Piltdown Man" was promptly removed from the British Museum, which had displayed it for more than 40 years.

Piltdown Man was a forgery made by attaching an ape's jaw to a human skull

The Piltdown scandal clearly shows that nothing could stop evolutionists from trying to prove their theories. Indeed, the scandal shows that evolutionists had no discoveries at all supporting their theory. Since they had no evidence, they chose to make it themselves.

KEKELIRUAN PEMIKIRAN TENTANG REKAPITULASI

Teori Haeckel ini menganggap bahwa embrio hidup mengalami ulangan proses evolusi seperti yang dialami moyang-palsunya. Haeckel berteori bahwa selama perkembangan di dalam rahim ibunya, embrio manusia kali pertama memperlihatkan sifat-sifat seekor ikan, lalu reptil, dan akhirnya manusia.

Sejak itu telah dibuktikan bahwa teori ini sepenuhnya omong kosong. Kini telah diketahui bahwa “insang-insang” yang disangka muncul pada tahap-tahap awal embrio manusia ternyata adalah taraf-taraf awal saluran telinga dalam, kelenjar paratiroid, dan kelenjar gondok. Bagian embrio yang diserupakan dengan “kantung kuning telur” ternyata kantung yang menghasilkan darah bagi si janin. Bagian yang dikenali sebagai “ekor” oleh Haeckel dan para pengikutnya sebenarnya tulang belakang, yang mirip ekor hanya karena tumbuh mendahului kaki.

Inilah fakta-fakta yang diterima luas di dunia lmiah, dan bahkan telah diterima oleh para evolusionis sendiri. Dua pemimpin neo-Darwinis, George Gaylord Simpson dan W. Beck telah mengakui:

Haeckel keliru menyatakan azas evolusi yang terlibat. Kini telah benar-benar diyakini bahwa ontogeni tidak mengulangi filogeni

Segi menarik lain dari “rekapitulasi” adalah Ernst Haeckel sendiri, seorang pemalsu yang mereka-reka gambar-gambar demi mendukung teori yang diajukannya. Pemalsuan Haeckel bermaksud menunjukkan bahwa embrio-embrio ikan dan manusia mirip satu sama lain.

Pada terbitan 5 September 1997 majalah ilmiah Science, sebuah artikel diterbitkan yang mengungkapkan bahwa gambar-gambar embrio Haeckel adalah karya penipuan. Artikel berjudul “Haeckel’s Embryos: Fraud Rediscovered” (Embrio-embrio Haeckel: Mengungkap Ulang Sebuah Penipuan) ini mengatakan:

The impression [Haeckel's drawings] give, that the embryos are exactly alike, is wrong, says Michael Richardson, an embryologist at St. George's Hospital Medical School in London… So he and his colleagues did their own comparative study, re-examining and photographing embryos roughly matched by species and age with those Haeckel drew. Lo and behold, the embryos "often looked surprisingly different," Richardson reports in the August [1997] issue of Anatomy and Embryology.

In its September 5, 1997 issue, the leading journal Science ran an article revealing that Haeckel's embryo drawings had been faked. The article described how the embryos are in fact very different from one another...

Research in recent years has shown that embryos of different species do not resemble one another in the way Haeckel showed. The large differences among the mammal, reptile, and bat embryos above are a clear example of this.

Science explained that, to make the embryos look similar, Haeckel deliberately left some organs out of his drawings or added imaginary ones. Later in the same article, the following was revealed:

Not only did Haeckel add or omit features, Richardson and his colleagues report, but he also changed the scale to exaggerate similarities among species, even where there were 10-fold differences in size. Haeckel blurred the differences further by failing to name the species in many cases, as if one representative were accurate for an entire group of animals. In reality, Richardson and his colleagues note, even the embryos of closely related animals such as fish vary considerably in appearance and in the sequence of their development.

The Science article discusses how Haeckel's admissions on this matter were covered up from the start of the 20th century, and how the fake drawings came to be presented as scientific fact in textbooks:

Haeckel's confession was lost after his drawings were used in a 1901 book called Darwin and After Darwin and reproduced widely in English-language biology textbooks.

In short, the fact that Haeckel's drawings were faked had come to light by 1901, yet the whole scientific world went on being deceived by them for a century.

WHEN HUMANS SEARCH FOR THEIR ANCESTORS

Although evolutionists have failed to find scientific evidence for their theory, they have been very successful at one thing: propaganda. The most important element of this propaganda is the fake drawings and models known as "reconstructions".

A reconstruction can be defined as a drawing or model of a living creature based on a single piece of bone found at an excavation. The "ape-men" we see in newspapers, magazines, and films are all reconstructions.

Unable to find any "half-man, half-ape" creature in the fossil record, they chose to mislead the public with fake drawings.

Like the evolutionists' other claims about the origin of living things, their claims about the origin of humans have no scientific basis. Numerous findings show that "human evolution" is nothing but a fairy tale.

Darwin put forward his claim that humans and apes share a common ancestor in his book The Descent of Man, published in 1871. Since then, Darwin's followers have tried to support that claim. Yet despite extensive research, the claim of "human evolution" has never been backed by real scientific findings, especially in the fossil record.

This discovery clearly shows that the idea of acquired traits accumulating from one generation to the next until a new species emerges is impossible. In other words, Darwin's mechanism of natural selection cannot drive evolution. Darwin's theory of evolution had therefore already collapsed at the start of the 20th century, with the discovery of genetics. Every other effort by proponents of evolution in the 20th century has failed.

The theory of evolution claims that the different groups of living things (phyla) arose and developed from a single common ancestor and grew increasingly different from one another over time. The top figure shows this claim, which can be pictured as a branching tree. The fossil record, however, shows the opposite. As the bottom figure shows, the various groups of living things appeared suddenly and at the same time, each with its own distinctive body plan. Around 100 phyla appeared abruptly in the Cambrian period. After that their number decreased (because some phyla went extinct) rather than increased.

WHAT LIES BEHIND MILLER'S EXPERIMENT

The most widely accepted study on the origin of life is the experiment carried out by the American researcher Stanley Miller in 1953. (It is also known as the "Urey-Miller experiment" because of the contribution of Miller's supervisor at the University of Chicago, Harold Urey.) This experiment is the only "evidence" evolutionists offer for the idea of "chemical evolution", which they present as the first stage of the evolutionary process that they believe eventually produced life.

With his experiment, Stanley Miller aimed to show that on the lifeless Earth of billions of years ago, amino acids, the molecular building blocks of proteins, could have formed by themselves through natural processes, without any deliberate intervention beyond the forces of nature. Miller used a gas mixture he believed was present on the primordial Earth (a belief later shown to be wrong): ammonia, methane, hydrogen, and water vapor. Because these gases do not react with one another under natural conditions, he added energy to trigger reactions between them. Assuming this energy could have come from lightning in the primordial atmosphere, he used an electric current for the purpose.

The primordial atmosphere Miller tried to simulate did not match reality. By the 1980s, scientists agreed that nitrogen and carbon dioxide, rather than methane and ammonia, should have been used in the artificial environment.

The American scientists J. P. Ferris and C. T. Chen repeated Miller's experiment with an atmosphere of carbon dioxide, hydrogen, nitrogen, and water vapor, and were unable to obtain even a single amino acid molecule. (J. P. Ferris, C. T. Chen, "Photochemistry of Methane, Nitrogen, and Water Mixture As a Model for the Atmosphere of the Primitive Earth," Journal of the American Chemical Society, vol. 97:11, 1975, p. 2964.)

Several findings indicate that the oxygen level in the atmosphere at that time was much higher than evolutionists had previously claimed. Studies also show that the amount of ultraviolet radiation reaching the Earth then was 10,000 times higher than evolutionists had estimated. This intense radiation would certainly have released oxygen by breaking down the water vapor and carbon dioxide in the atmosphere.

This completely contradicts Miller's experiment, in which oxygen was ignored altogether. Had oxygen been included, the methane would have broken down into carbon dioxide and water, and the ammonia into nitrogen and water. Conversely, in an oxygen-free environment there would have been no ozone layer either, so any amino acids would quickly have been destroyed by the strongest ultraviolet rays, with no ozone layer to shield them. In other words, with or without oxygen on the primordial Earth, the result is an environment lethal to amino acids.

Why, then, is Miller's experiment still featured in textbooks and treated as important evidence for the chemical origin of life? This shows once again that evolution is not a scientific theory but a blind belief, held on to even though the evidence points the other way.

The general public is mostly unaware of these facts and assumes that the claim of human evolution is backed by strong evidence. This mistaken impression arises because the subject is often discussed in the mass media and presented as proven fact. Real experts in the field, however, know that the story of "human evolution" has no scientific basis.

January 13, 2012

how to create a form with Sencha Touch

Sencha Touch is an HTML5 framework for building touch-based applications. An application written with it can run, without changes, on iOS, Android, and BlackBerry touch devices. The framework ships with a range of built-in UI components similar to the ones we see on different mobile platforms, plus a well-designed, efficient data package for working with a variety of client-side or server-side data sources.

The framework also offers an API for working with the DOM and is extensible in every aspect, a combination that every company looks for and expects in a framework.

Here are the steps:

1. Open a text editor.

2. Write the following source code:

Ext.setup({
    onReady: function() {
        var form;

        //form and related fields config
        var formBase = {
            //enable vertical scrolling in case the form exceeds the page height
            scroll: 'vertical',
            url: 'http://localhost/test.php',
            items: [{ //add a fieldset
                xtype: 'fieldset',
                title: 'Personal Info',
                instructions: 'Please enter the information above.',
                //apply the common settings to all the child items of the fieldset
                defaults: {
                    required: true, //required field
                    labelAlign: 'left',
                    labelWidth: '40%'
                },
                items: [
                    { //add a text field
                        xtype: 'textfield',
                        name: 'name',
                        label: 'Name',
                        useClearIcon: true, //shows the clear icon in the field when user types
                        autoCapitalize: false
                    }, { //add a password field
                        xtype: 'passwordfield',
                        name: 'password',
                        label: 'Password',
                        useClearIcon: false
                    }, {
                        xtype: 'passwordfield',
                        name: 'reenter',
                        label: 'Re-enter Password',
                        useClearIcon: true
                    }, { //add an email field
                        xtype: 'emailfield',
                        name: 'email',
                        label: 'Email',
                        placeHolder: 'you@sencha.com',
                        useClearIcon: true
                    }
                ]
            }],
            listeners: {
                //listener if the form is submitted successfully
                submit: function(form, result) {
                    console.log('success', Ext.toArray(arguments));
                },
                //listener if the form submission fails
                exception: function(form, result) {
                    console.log('failure', Ext.toArray(arguments));
                }
            },
            //items docked to the bottom of the form
            dockedItems: [
                {
                    xtype: 'toolbar',
                    dock: 'bottom',
                    items: [
                        {
                            text: 'Reset',
                            handler: function() {
                                form.reset(); //reset the fields
                            }
                        },
                        {
                            text: 'Save',
                            ui: 'confirm',
                            handler: function() {
                                //submit the form data to the url
                                form.submit();
                            }
                        }
                    ]
                }
            ]
        };

        if (Ext.is.Phone) {
            formBase.fullscreen = true;
        } else { //if desktop
            Ext.apply(formBase, {
                autoRender: true,
                floating: true,
                modal: true,
                centered: true,
                hideOnMaskTap: false,
                height: 385,
                width: 480
            });
        }

        //create form panel
        form = new Ext.form.FormPanel(formBase);
        form.show(); //render the form to the body
    }
});

3. Save the file under any name you like, but don't forget the .js extension. I chose ch106.js.

4. Include the script in an HTML page.

Save the page under any name you like, ending in .html. I chose sc2.html.

Then open it in a browser on an emulator, or upload it to a server and test it on an Android phone, a BlackBerry tablet, an iPhone, or an iPad. If none of these is available, Google Chrome will do.
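The form has a Re-enter Password field, but nothing in the config above actually compares it with the Password field. Below is a minimal sketch of a client-side check in plain JavaScript. `validateSignup` is a hypothetical helper, not part of Sencha Touch. It takes a plain object keyed by the field names from the form config (name, password, reenter, email) and returns a list of error messages.

```javascript
// Hypothetical helper, not part of Sencha Touch: checks the values of the
// form above before they are sent to the server.
// `values` is a plain object keyed by the field names used in the form
// config: name, password, reenter, email.
function validateSignup(values) {
    var errors = [];
    // Every field in the fieldset is marked required: true.
    ['name', 'password', 'reenter', 'email'].forEach(function (field) {
        if (!values[field] || String(values[field]).trim() === '') {
            errors.push(field + ' is required');
        }
    });
    // The "Re-enter Password" field is only useful if it is compared.
    if (values.password !== values.reenter) {
        errors.push('passwords do not match');
    }
    // Deliberately loose e-mail check: something@something.something
    if (values.email && !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(values.email)) {
        errors.push('email looks invalid');
    }
    return errors;
}

// Example values, as the form might return them.
var errors = validateSignup({
    name: 'Budi',
    password: 'rahasia',
    reenter: 'rahasia2',
    email: 'you@sencha.com'
});
console.log(errors); // [ 'passwords do not match' ]
```

One way to wire this in is to call `validateSignup(form.getValues())` inside the Save handler and only call `form.submit()` when the returned list is empty. This assumes your Sencha Touch version's FormPanel provides `getValues()`, so check the API docs for your release. The server at test.php should still validate the data again, because client-side checks can be bypassed.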

Here is the result:

January 04, 2012

here is the calculator

calculator prototype

This is the prototype of my scientific calculator. It still needs more features, but this will do for now.

January 03, 2012

history of internet

The history of the Internet began with the development of computers in the 1950s. This began with point-to-point communication between mainframe computers and terminals, expanded to point-to-point connections between computers and then early research into packet switching. Packet switched networks such as ARPANET, Mark I at NPL in the UK, CYCLADES, Merit Network, Tymnet, and Telenet, were developed in the late 1960s and early 1970s using a variety of protocols. The ARPANET in particular led to the development of protocols for internetworking, where multiple separate networks could be joined together into a network of networks.

In 1982 the Internet Protocol Suite (TCP/IP) was standardized and the concept of a world-wide network of fully interconnected TCP/IP networks called the Internet was introduced. Access to the ARPANET was expanded in 1981 when the National Science Foundation (NSF) developed the Computer Science Network (CSNET) and again in 1986 when NSFNET provided access to supercomputer sites in the United States from research and education organizations. Commercial internet service providers (ISPs) began to emerge in the late 1980s and 1990s. The ARPANET was decommissioned in 1990. The Internet was commercialized in 1995 when NSFNET was decommissioned, removing the last restrictions on the use of the Internet to carry commercial traffic.

Since the mid-1990s the Internet has had a drastic impact on culture and commerce, including the rise of near-instant communication by electronic mail, instant messaging, Voice over Internet Protocol (VoIP) "phone calls", two-way interactive video calls, and the World Wide Web with its discussion forums, blogs, social networking, and online shopping sites. The research and education community continues to develop and use advanced networks such as NSF's very high speed Backbone Network Service (vBNS), Internet2, and National LambdaRail. Increasing amounts of data are transmitted at higher and higher speeds over fiber optic networks operating at 1-Gbit/s, 10-Gbit/s, or more. The Internet continues to grow, driven by ever greater amounts of online information and knowledge, commerce, entertainment and social networking.

It is estimated that in 1993 the Internet carried only 1% of the information flowing through two-way telecommunication. By 2000 this figure had grown to 51%, and by 2007 more than 97% of all telecommunicated information was carried over the Internet.

The Internet has precursors that date back to the 19th century, especially the telegraph system, more than a century before the digital Internet became widely used in the second half of the 1990s. The concept of data communication – transmitting data between two different places, connected via some kind of electromagnetic medium, such as radio or an electrical wire – predates the introduction of the first computers. Such communication systems were typically limited to point to point communication between two end devices. Telegraph systems and telex machines can be considered early precursors of this kind of communication.

Early computers used the technology available at the time to allow communication between the central processing unit and remote terminals. As the technology evolved, new systems were devised to allow communication over longer distances (for terminals) or with higher speed (for interconnection of local devices) that were necessary for the mainframe computer model. Using these technologies it was possible to exchange data (such as files) between remote computers. However, the point to point communication model was limited, as it did not allow for direct communication between any two arbitrary systems; a physical link was necessary. The technology was also deemed as inherently unsafe for strategic and military use, because there were no alternative paths for the communication in case of an enemy attack.

Three terminals and an ARPA

A fundamental pioneer in the call for a global network, J. C. R. Licklider, articulated the ideas in his January 1960 paper, Man-Computer Symbiosis.

"A network of such [computers], connected to one another by wide-band communication lines [which provided] the functions of present-day libraries together with anticipated advances in information storage and retrieval and [other] symbiotic functions."

(J. C. R. Licklider)

In August 1962, Licklider and Welden Clark published the paper "On-Line Man Computer Communication", one of the first descriptions of a networked future.

In October 1962, Licklider was hired by Jack Ruina as Director of the newly established Information Processing Techniques Office (IPTO) within DARPA, with a mandate to interconnect the United States Department of Defense's main computers at Cheyenne Mountain, the Pentagon, and SAC HQ. There he formed an informal group within DARPA to further computer research. He began by writing memos describing a distributed network to the IPTO staff, whom he called "Members and Affiliates of the Intergalactic Computer Network". As part of the information processing office's role, three network terminals had been installed: one for System Development Corporation in Santa Monica, one for Project Genie at the University of California, Berkeley, and one for the Compatible Time-Sharing System project at the Massachusetts Institute of Technology (MIT). The waste of resources caused by this arrangement would make obvious the need for internetworking that Licklider had identified.

"For each of these three terminals, I had three different sets of user commands. So if I was talking online with someone at S.D.C. and I wanted to talk to someone I knew at Berkeley or M.I.T. about this, I had to get up from the S.D.C. terminal, go over and log into the other terminal and get in touch with them. [...] I said, it's obvious what to do (But I don't want to do it): If you have these three terminals, there ought to be one terminal that goes anywhere you want to go where you have interactive computing. That idea is the ARPAnet."

(Robert W. Taylor, co-writer with Licklider of "The Computer as a Communications Device", in an interview with The New York Times)

Although Licklider left the IPTO in 1964, five years before the ARPANET went live, it was his vision of universal networking that gave his successors, such as Lawrence Roberts and Robert Taylor, the impetus to push ARPANET development forward. Licklider later returned to lead the IPTO in 1973 for two years.

Packet switching

At the heart of the problem lay the issue of connecting separate physical networks to form one logical network. During the 1960s, Paul Baran (RAND Corporation) produced a study of survivable networks for the US military. Information transmitted across Baran's network would be divided into what he called 'message-blocks'. Independently, Donald Davies (National Physical Laboratory, UK) proposed and developed a similar network based on what he called packet-switching, the term that would ultimately be adopted. Leonard Kleinrock (MIT) developed the mathematical theory behind this technology. Packet-switching provides better bandwidth utilization and response times than the traditional circuit-switching technology used for telephony, particularly on resource-limited interconnection links.

Packet switching is a rapid store-and-forward networking design that divides messages into arbitrary packets, with routing decisions made per packet. Early networks used message-switched systems that required rigid routing structures prone to single points of failure. This led Tommy Krash and Paul Baran's U.S. military-funded research to focus on using message-blocks to include network redundancy, which in turn led to the widespread urban legend that the Internet was designed to resist nuclear attack.
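The per-packet idea can be illustrated with a small simulation. This is a minimal sketch, not a model of any historical network: the packet format (`seq`, `total`, `data`), the 2-character packet size, and the toy routing in `deliver` are all assumptions made for illustration. The message "LOGIN" echoes the first ARPANET login attempt.

```javascript
// Minimal sketch of the packet-switching idea, not any historical protocol:
// a message is cut into small numbered packets, each packet travels on its
// own, and the receiver puts them back in order using the sequence numbers.

// Split `message` into packets carrying at most `size` characters each.
function toPackets(message, size) {
    var total = Math.ceil(message.length / size);
    var packets = [];
    for (var i = 0, seq = 0; i < message.length; i += size, seq++) {
        packets.push({ seq: seq, total: total, data: message.slice(i, i + size) });
    }
    return packets;
}

// Hypothetical per-packet "routing": odd and even packets take different
// paths, so they arrive in a different order than they were sent.
function deliver(packets) {
    var evens = packets.filter(function (p) { return p.seq % 2 === 0; });
    var odds = packets.filter(function (p) { return p.seq % 2 === 1; });
    return odds.reverse().concat(evens);
}

// Reassemble by sorting on the sequence number; fail if any packet is missing.
function reassemble(received) {
    if (received.length === 0) return '';
    var total = received[0].total;
    if (received.length !== total) throw new Error('missing packets');
    return received.slice()
        .sort(function (a, b) { return a.seq - b.seq; })
        .map(function (p) { return p.data; })
        .join('');
}

var sent = toPackets('LOGIN', 2);   // "LO", "GI", "N"
var arrived = deliver(sent);        // arrival order: "GI", "LO", "N"
console.log(reassemble(arrived));   // LOGIN
```

Because each packet carries its own sequence number, the network is free to route packets independently, and only the receiver needs to know how to put the message back together.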

Networks that led to the Internet

ARPANET

Len Kleinrock and the first Interface Message Processor.

Promoted to head of the information processing office at DARPA, Robert Taylor intended to realize Licklider's ideas of an interconnected networking system. Bringing in Larry Roberts from MIT, he initiated a project to build such a network. The first ARPANET link was established between the University of California, Los Angeles and the Stanford Research Institute at 22:30 on October 29, 1969.

"We set up a telephone connection between us and the guys at SRI ...", Kleinrock ... said in an interview: "We typed the L and we asked on the phone,

"Do you see the L?"

"Yes, we see the L," came the response.

We typed the O, and we asked, "Do you see the O?"

"Yes, we see the O."

Then we typed the G, and the system crashed ...

Yet a revolution had begun" ....

By December 5, 1969, a 4-node network was connected by adding the University of Utah and the University of California, Santa Barbara. Building on ideas developed in ALOHAnet, the ARPANET grew rapidly. By 1981, the number of hosts had grown to 213, with a new host being added approximately every twenty days.

ARPANET became the technical core of what would become the Internet, and a primary tool in developing the technologies used. ARPANET development was centered around the Request for Comments (RFC) process, still used today for proposing and distributing Internet Protocols and Systems. RFC 1, entitled "Host Software", was written by Steve Crocker from the University of California, Los Angeles, and published on April 7, 1969. These early years were documented in the 1972 film Computer Networks: The Heralds of Resource Sharing.

International collaborations on ARPANET were sparse. For various political reasons, European developers were concerned with developing the X.25 networks. Notable exceptions were the Norwegian Seismic Array (NORSAR) in 1972, followed in 1973 by Sweden with satellite links to the Tanum Earth Station and Peter Kirstein's research group in the UK, initially at the Institute of Computer Science, London University and later at University College London.

NPL

In 1965, Donald Davies of the National Physical Laboratory (United Kingdom) proposed a national data network based on packet-switching. The proposal was not taken up nationally, but by 1970 he had designed and built the Mark I packet-switched network to meet the needs of the multidisciplinary laboratory and to prove the technology under operational conditions. By 1976, 12 computers and 75 terminal devices were attached, and more were added until the network was replaced in 1986.

Merit Network

The Merit Network was formed in 1966 as the Michigan Educational Research Information Triad to explore computer networking between three of Michigan's public universities as a means to help the state's educational and economic development. With initial support from the State of Michigan and the National Science Foundation (NSF), the packet-switched network was first demonstrated in December 1971, when an interactive host-to-host connection was made between the IBM mainframe computer systems at the University of Michigan in Ann Arbor and Wayne State University in Detroit. In October 1972, connections to the CDC mainframe at Michigan State University in East Lansing completed the triad. Over the next several years, in addition to host-to-host interactive connections, the network was enhanced to support terminal-to-host connections, host-to-host batch connections (remote job submission, remote printing, batch file transfer), interactive file transfer, gateways to the Tymnet and Telenet public data networks, X.25 host attachments, gateways to X.25 data networks, and Ethernet-attached hosts; eventually it adopted TCP/IP, and additional public universities in Michigan joined the network.[17][18] All of this set the stage for Merit's role in the NSFNET project starting in the mid-1980s.

CYCLADES

The CYCLADES packet switching network was a French research network designed and directed by Louis Pouzin. First demonstrated in 1973, it was developed to explore alternatives to the initial ARPANET design and to support network research generally. It was the first network to make the hosts responsible for the reliable delivery of data, rather than the network itself, using unreliable datagrams and associated end-to-end protocol mechanisms.[19][20]
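The CYCLADES idea of making the hosts, rather than the network, responsible for reliable delivery can be sketched as a simple stop-and-wait scheme: the sending host retransmits each datagram until the receiving host acknowledges it. This is an illustration under stated assumptions, not the CYCLADES or TCP protocol. The fixed loss pattern, the `maxTries` limit, and all function names are hypothetical.

```javascript
// Sketch of end-to-end reliability: the network may silently drop datagrams,
// and the sending host keeps retransmitting until the receiving host
// acknowledges. Simple stop-and-wait, chosen for clarity; it is not the
// actual CYCLADES or TCP protocol.

// Hypothetical unreliable network: dropPattern[n] === true means the n-th
// transmission attempt is lost. A fixed pattern keeps the run repeatable.
function makeLossyNetwork(dropPattern) {
    var attempt = 0;
    return function send(datagram, receiver) {
        var lost = dropPattern[attempt++] === true;
        return lost ? null : receiver(datagram); // null = no acknowledgement
    };
}

// Receiving host: accepts each sequence number once and returns an ACK.
function makeReceiver(delivered) {
    var expected = 0;
    return function (datagram) {
        if (datagram.seq === expected) { // new data: keep it
            delivered.push(datagram.data);
            expected++;
        }                                // a duplicate is only re-acknowledged
        return { ack: datagram.seq };
    };
}

// Sending host: send each piece, retransmit until the matching ACK comes back.
// Returns the total number of transmissions, including retransmissions.
function reliableSend(pieces, network, receiver, maxTries) {
    var transmissions = 0;
    pieces.forEach(function (data, seq) {
        for (var tries = 0; ; tries++) {
            if (tries === maxTries) throw new Error('gave up on seq ' + seq);
            transmissions++;
            var reply = network({ seq: seq, data: data }, receiver);
            if (reply && reply.ack === seq) break;
        }
    });
    return transmissions;
}

var delivered = [];
var network = makeLossyNetwork([false, true, true, false, false]);
var count = reliableSend(['A', 'B', 'C'], network, makeReceiver(delivered), 5);
console.log(delivered.join(''), count); // ABC 5
```

The network only has to carry datagrams, so all of the recovery logic lives in the two hosts. TCP later adopted the same division of labor, with a far more elaborate sliding-window scheme instead of stop-and-wait.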

X.25 and public data networks

Based on ARPA's research, packet switching network standards were developed by the International Telecommunication Union (ITU) in the form of X.25 and related standards. While using packet switching, X.25 is built on the concept of virtual circuits emulating traditional telephone connections. In 1974, X.25 formed the basis for the SERCnet network between British academic and research sites, which later became JANET. The initial ITU Standard on X.25 was approved in March 1976.[21]

The British Post Office, Western Union International and Tymnet collaborated to create the first international packet switched network, referred to as the International Packet Switched Service (IPSS), in 1978. This network grew from Europe and the US to cover Canada, Hong Kong and Australia by 1981. By the 1990s it provided a worldwide networking infrastructure.[22]

Unlike ARPANET, X.25 was commonly available for business use. Telenet offered its Telemail electronic mail service, which was also targeted to enterprise use rather than the general email system of the ARPANET.

The first public dial-in networks used asynchronous TTY terminal protocols to reach a concentrator operated in the public network. Some networks, such as CompuServe, used X.25 to multiplex the terminal sessions into their packet-switched backbones, while others, such as Tymnet, used proprietary protocols. In 1979, CompuServe became the first service to offer electronic mail capabilities and technical support to personal computer users. The company broke new ground again in 1980 as the first to offer real-time chat with its CB Simulator. Other major dial-in networks were America Online (AOL) and Prodigy that also provided communications, content, and entertainment features. Many bulletin board system (BBS) networks also provided on-line access, such as FidoNet which was popular amongst hobbyist computer users, many of them hackers and amateur radio operators.[citation needed]

UUCP and Usenet

In 1979, two students at Duke University, Tom Truscott and Jim Ellis, came up with the idea of using simple Bourne shell scripts to transfer news and messages over a serial-line UUCP connection with the nearby University of North Carolina at Chapel Hill. After the software was publicly released, the mesh of UUCP hosts forwarding Usenet news expanded rapidly. UUCPnet, as it would later be named, also created gateways and links between FidoNet and dial-up BBS hosts. UUCP networks spread quickly because of the lower costs involved, the ability to use existing leased lines, X.25 links, or even ARPANET connections, and the lack of strict use policies compared with later networks like CSNET and Bitnet (commercial organizations, which might provide bug fixes, were welcome). All connections were local. By 1981 the number of UUCP hosts had grown to 550, nearly doubling to 940 in 1984. Sublink Network, operating since 1987 and officially founded in Italy in 1989, based its interconnectivity on UUCP to redistribute mail and newsgroup messages throughout its Italian nodes (about 100 at the time), owned both by private individuals and by small companies. Sublink Network was possibly one of the first examples of Internet technology spreading through popular diffusion.

Merging the networks and creating the Internet (1973–90)

TCP/IP

Map of the TCP/IP test network in February 1982

With so many different network methods, something was needed to unify them. Robert E. Kahn of DARPA and ARPANET recruited Vinton Cerf of Stanford University to work with him on the problem. By 1973, they had worked out a fundamental reformulation, where the differences between network protocols were hidden by using a common internetwork protocol, and instead of the network being responsible for reliability, as in the ARPANET, the hosts became responsible. Cerf credits Hubert Zimmerman, Gerard LeLann and Louis Pouzin (designer of the CYCLADES network) with important work on this design.[24]

The specification of the resulting protocol, RFC 675 – Specification of Internet Transmission Control Program, by Vinton Cerf, Yogen Dalal and Carl Sunshine, Network Working Group, December 1974, contains the first attested use of the term internet, as a shorthand for internetworking; later RFCs repeat this use, so the word started out as an adjective rather than the noun it is today.

A Stanford Research Institute packet radio van, site of the first three-way internetworked transmission.

With the role of the network reduced to the bare minimum, it became possible to join almost any networks together, no matter what their characteristics were, thereby solving Kahn's initial problem. DARPA agreed to fund development of prototype software, and after several years of work, the first demonstration of a gateway between the Packet Radio network in the SF Bay area and the ARPANET was conducted by the Stanford Research Institute. On November 22, 1977 a three network demonstration was conducted including the ARPANET, the Packet Radio Network and the Atlantic Packet Satellite network.

Stemming from the first specifications of TCP in 1974, TCP/IP emerged in mid-late 1978 in nearly final form. By 1981, the associated standards were published as RFCs 791, 792 and 793 and adopted for use. DARPA sponsored or encouraged the development of TCP/IP implementations for many operating systems and then scheduled a migration of all hosts on all of its packet networks to TCP/IP. On January 1, 1983, known as flag day, TCP/IP protocols became the only approved protocol on the ARPANET, replacing the earlier NCP protocol.

ARPANET to the federal wide area networks: MILNET, NSI, ESNet, CSNET, and NSFNET

BBN Technologies TCP/IP internet map early 1986

After the ARPANET had been up and running for several years, ARPA looked for another agency to hand the network off to; ARPA's primary mission was funding cutting-edge research and development, not running a communications utility. Eventually, in July 1975, the network was turned over to the Defense Communications Agency, also part of the Department of Defense. In 1983, the U.S. military portion of the ARPANET was broken off as a separate network, the MILNET. MILNET subsequently became the unclassified but military-only NIPRNET, in parallel with the SECRET-level SIPRNET and JWICS for TOP SECRET and above. NIPRNET does have controlled security gateways to the public Internet.

The networks based on the ARPANET were government funded and therefore restricted to noncommercial uses such as research; unrelated commercial use was strictly forbidden. This initially restricted connections to military sites and universities. During the 1980s, the connections expanded to more educational institutions, and even to a growing number of companies such as Digital Equipment Corporation and Hewlett-Packard, which were participating in research projects or providing services to those who were.

Several other branches of the U.S. government, the National Aeronautics and Space Administration (NASA), the National Science Foundation (NSF), and the Department of Energy (DOE), became heavily involved in Internet research and started development of a successor to ARPANET. In the mid-1980s, all three of these branches developed the first wide area networks based on TCP/IP. NASA developed the NASA Science Network, NSF developed CSNET, and DOE evolved the Energy Sciences Network, or ESNet.

T3 NSFNET Backbone, c. 1992

NASA developed the TCP/IP-based NASA Science Network (NSN) in the mid-1980s, connecting space scientists to data and information stored anywhere in the world. In 1989, the DECnet-based Space Physics Analysis Network (SPAN) and the TCP/IP-based NASA Science Network (NSN) were brought together at NASA Ames Research Center, creating the first multiprotocol wide area network, called the NASA Science Internet, or NSI. NSI was established to provide a totally integrated communications infrastructure to the NASA scientific community for the advancement of earth, space and life sciences. As a high-speed, multiprotocol, international network, NSI provided connectivity to over 20,000 scientists across all seven continents.

In 1981, NSF supported the development of the Computer Science Network (CSNET). CSNET connected with ARPANET using TCP/IP, and ran TCP/IP over X.25, but it also supported departments without sophisticated network connections, using automated dial-up mail exchange. Its experience with CSNET led NSF to use TCP/IP when it created NSFNET, a 56 kbit/s backbone established in 1986, which connected the NSF-supported supercomputing centers and regional research and education networks in the United States.[28] However, use of NSFNET was not limited to supercomputer users, and the 56 kbit/s network quickly became overloaded. NSFNET was upgraded to 1.5 Mbit/s in 1988. The existence of NSFNET and the creation of Federal Internet Exchanges (FIXes) allowed the ARPANET to be decommissioned in 1990. NSFNET was expanded and upgraded to 45 Mbit/s in 1991, and was decommissioned in 1995 when it was replaced by backbones operated by several commercial Internet Service Providers.

Transition towards the Internet

The term "internet" was adopted in the first RFC published on the TCP protocol (RFC 675: Internet Transmission Control Program, December 1974) as an abbreviation of the term internetworking, and the two terms were used interchangeably. In general, an internet was any network using TCP/IP. Around the time ARPANET was interlinked with NSFNET in the late 1980s, the term came to be used as the name of the network itself, the Internet: a large, global TCP/IP network.

As interest in widespread networking grew and new applications for it were developed, the Internet's technologies spread throughout the rest of the world. The network-agnostic approach in TCP/IP meant that it was easy to use any existing network infrastructure, such as the IPSS X.25 network, to carry Internet traffic. In 1984, University College London replaced its transatlantic satellite links with TCP/IP over IPSS.[31]

Many sites unable to link directly to the Internet started to create simple gateways to allow transfer of e-mail, at that time the most important application. Sites that only had intermittent connections used UUCP or FidoNet and relied on the gateways between these networks and the Internet. Some gateway services went beyond simple e-mail peering, such as allowing access to FTP sites via UUCP or e-mail.

Finally, the Internet's remaining centralized routing aspects were removed. The EGP routing protocol was replaced by a new protocol, the Border Gateway Protocol (BGP). This turned the Internet into a meshed topology and moved it away from the centralized architecture that ARPANET had emphasized. In 1994, Classless Inter-Domain Routing (CIDR) was introduced to conserve address space better and to allow route aggregation, which decreases the size of routing tables.

TCP/IP goes global (1989–2000)

CERN, the European Internet, the link to the Pacific and beyond

Between 1984 and 1988, CERN began installation and operation of TCP/IP to interconnect its major internal computer systems, workstations, PCs and an accelerator control system. CERN continued to operate a limited self-developed system, CERNET, internally and several incompatible (typically proprietary) network protocols externally. There was considerable resistance in Europe towards more widespread use of TCP/IP, and the CERN TCP/IP intranets remained isolated from the Internet until 1989.

In 1988 Daniel Karrenberg, from Centrum Wiskunde & Informatica (CWI) in Amsterdam, visited Ben Segal, CERN's TCP/IP Coordinator, looking for advice about the transition of the European side of the UUCP Usenet network (much of which ran over X.25 links) over to TCP/IP. In 1987, Ben Segal had met with Len Bosack from the then still small company Cisco about purchasing some TCP/IP routers for CERN, and was able to give Karrenberg advice and forward him on to Cisco for the appropriate hardware. This expanded the European portion of the Internet across the existing UUCP networks, and in 1989 CERN opened its first external TCP/IP connections.[33] This coincided with the creation of Réseaux IP Européens (RIPE), initially a group of IP network administrators who met regularly to carry out co-ordination work together. Later, in 1992, RIPE was formally registered as a cooperative in Amsterdam.

At the same time as the rise of internetworking in Europe, ad hoc networks formed linking Australian universities to ARPA and to one another, based on various technologies such as X.25 and UUCPNet. Their connection to the global networks was limited by the cost of making individual international UUCP dial-up or X.25 connections. In 1989, Australian universities joined the push towards using IP protocols to unify their networking infrastructures. AARNet was formed in 1989 by the Australian Vice-Chancellors' Committee and provided a dedicated IP-based network for Australia.

The Internet began to penetrate Asia in the late 1980s. Japan, which had built the UUCP-based network JUNET in 1984, connected to NSFNET in 1989. It hosted the annual meeting of the Internet Society, INET'92, in Kobe. Singapore developed TECHNET in 1990, and Thailand gained a global Internet connection between Chulalongkorn University and UUNET in 1992.

Global digital divide


While developed countries with technological infrastructures were joining the Internet, developing countries began to experience a digital divide separating them from the Internet. On an essentially continental basis, they are building organizations for Internet resource administration and sharing operational experience, as more and more transmission facilities go into place.

Africa

At the beginning of the 1990s, African countries relied upon X.25 IPSS and 2400 baud modem UUCP links for international and internetwork computer communications.

In August 1995, InfoMail Uganda, Ltd., a privately held firm in Kampala now known as InfoCom, and NSN Network Services of Avon, Colorado, sold in 1997 and now known as Clear Channel Satellite, established Africa's first native TCP/IP high-speed satellite Internet services. The data connection was originally carried by a C-Band RSCC Russian satellite which connected InfoMail's Kampala offices directly to NSN's MAE-West point of presence using a private network from NSN's leased ground station in New Jersey. InfoCom's first satellite connection was just 64 kbit/s, serving a Sun host computer and twelve US Robotics dial-up modems.

In 1996, a USAID-funded project, the Leland Initiative, started work on developing full Internet connectivity for the continent. Guinea, Mozambique, Madagascar and Rwanda gained satellite earth stations in 1997, followed by Côte d'Ivoire and Benin in 1998.

Africa is building an Internet infrastructure. AfriNIC, headquartered in Mauritius, manages IP address allocation for the continent. As in the other Internet regions, there is an operational forum, the Internet Community of Operational Networking Specialists.

A wide range of programs aims to provide high-performance transmission plant, and the western and southern coasts have undersea optical cable. High-speed cables join North Africa and the Horn of Africa to intercontinental cable systems. Undersea cable development is slower for East Africa; the original joint effort between the New Partnership for Africa's Development (NEPAD) and the East Africa Submarine System (EASSy) has broken off and may become two efforts.

Asia and Oceania

The Asia Pacific Network Information Centre (APNIC), headquartered in Australia, manages IP address allocation for the region. APNIC sponsors an operational forum, the Asia-Pacific Regional Internet Conference on Operational Technologies (APRICOT).

In 1991, the People's Republic of China saw its first TCP/IP college network, Tsinghua University's TUNET. The PRC went on to make its first global Internet connection in 1994, between the Beijing Electro-Spectrometer Collaboration and Stanford University's Linear Accelerator Center. However, China went on to create its own digital divide by deploying a country-wide content filter.

Latin America

As with the other regions, the Latin American and Caribbean Internet Addresses Registry (LACNIC) manages the IP address space and other resources for its area. LACNIC, headquartered in Uruguay, operates DNS root, reverse DNS, and other key services.

Opening the network to commerce

Commercial use of the Internet became a hotly debated topic. Although commercial use was forbidden, the exact definition of commercial use could be unclear and subjective. UUCPNet and the X.25 IPSS had no such restrictions, which eventually led to UUCPNet being officially barred from using ARPANET and NSFNET connections. Some UUCP links to these networks nevertheless remained, as administrators turned a blind eye to their operation.

During the late 1980s, the first Internet service provider (ISP) companies were formed. Companies like PSINet, UUNET, Netcom, and Portal Software were formed to provide service to the regional research networks and provide alternate network access, UUCP-based email and Usenet News to the public. The first commercial dialup ISP in the United States was The World, opened in 1989.

In 1992, Congress passed the Scientific and Advanced-Technology Act, 42 U.S.C. § 1862(g), which allowed NSF to support access by the research and education communities to computer networks which were not used exclusively for research and education purposes, thus permitting NSFNET to interconnect with commercial networks.[40][41] This caused controversy within the research and education community, who were concerned commercial use of the network might lead to an Internet that was less responsive to their needs, and within the community of commercial network providers, who felt that government subsidies were giving an unfair advantage to some organizations.